Leveraging LangChain’s OpenAPI Toolkit enables us to build AI agents that abstract away the complexities of interacting with large APIs. By separating high-level planning from low-level execution, agents can navigate extensive API specifications more efficiently, minimizing token usage and improving the coherence of long interaction sequences.

In this hands-on tutorial, I’ll demonstrate how to create a hierarchical planning agent that leverages LangChain’s OpenAPI Toolkit to simplify API interactions and streamline complex workflows.

1. The Concepts

1.1 OpenAPI Toolkit in LangChain

OpenAPI (formerly known as Swagger) is a standard specification for defining RESTful APIs in a machine-readable format. It outlines the endpoints, request/response formats, and authentication requirements, making it easy for tools and agents to understand how to interact with an API.

The OpenAPI Toolkit enables the creation of agents that can consume arbitrary APIs defined by the OpenAPI/Swagger specification. This toolkit allows AI models to interact with external APIs in a structured, scalable way, unlocking powerful capabilities such as real-time data retrieval, third-party service interactions, and complex task automation.

Benefits of Using the OpenAPI Toolkit:

- Enables agents to dynamically explore and consume APIs without manual intervention.

- Supports real-time data integration by connecting models to external services (e.g., weather APIs, financial data, ticket booking systems).

- Makes the model more actionable by allowing it to perform tasks that require external or up-to-date knowledge.

Use Cases:

- Customer Support Bots: Agents can retrieve order details, check account balances, or schedule appointments using an external API.

- Business Automation: Automatically trigger workflows, update records, or perform transactions through API endpoints.

- Dynamic Information Retrieval: Pull live data such as stock prices, weather updates, or news summaries via API calls.

1.2 What is Hierarchical Planning?

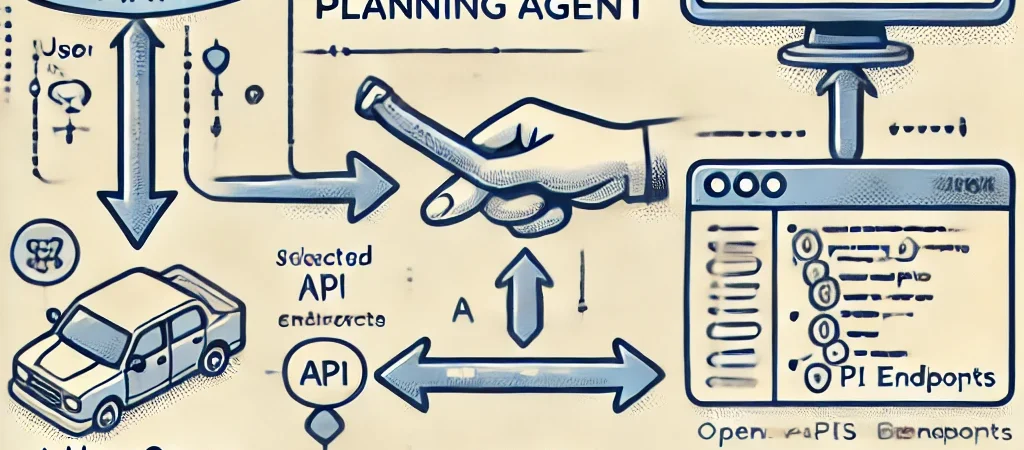

Hierarchical planning involves splitting agent tasks into two levels:

- Planner – Decides what API endpoints to call.

- Controller – Figures out how to call those endpoints and processes the responses.

1.3 Why Use Hierarchical Planning with LangChain’s OpenAPI Toolkit?

When working with large APIs, providing the model with all endpoint documentation at each step is inefficient. With LangChain’s tools architecture, we can improve efficiency by dynamically loading the relevant API documentation based on the Planner’s output.

In fact, using LangChain’s OpenAPI Toolkit, we can build both the Planner and Controller as LLM-powered components that collaborate to handle complex workflows. Here’s how the separation works:

- The Planner Tool uses a high-level summary of the available API endpoints to decide the sequence of actions. This summary consists of names and descriptions taken from the corresponding OpenAPI/Swagger specification.

- The Controller Tool loads the detailed OpenAPI spec for only the endpoints selected by the Planner, optimizing token usage and reducing hallucinations.
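The Planner/Controller split comes down to giving each component a different view of the same spec. The sketch below illustrates the idea in plain Python. It assumes a toy list of (name, description, docs) tuples, and the endpoints are hypothetical. This is not LangChain's internal code.

```python
# Toy stand-in for a reduced spec: (name, short description, detailed docs).
# These endpoints are hypothetical and only illustrate the idea.
ENDPOINTS = [
    ("GET /locations", "Search airports and cities",
     {"parameters": ["subType", "keyword"]}),
    ("GET /flight-offers", "Search flight offers",
     {"parameters": ["originLocationCode", "destinationLocationCode",
                     "departureDate", "adults"]}),
    ("POST /flight-orders", "Book a flight",
     {"requestBody": "flight order payload"}),
]


def planner_context(endpoints):
    """Compact context for the Planner: one line per endpoint, no parameter docs."""
    return "\n".join(f"{name}: {desc}" for name, desc, _ in endpoints)


def controller_context(endpoints, selected_names):
    """Detailed docs for the Controller, limited to the endpoints the Planner chose."""
    selected = set(selected_names)
    return {name: docs for name, _, docs in endpoints if name in selected}


print(planner_context(ENDPOINTS))
print(controller_context(ENDPOINTS, ["GET /flight-offers"]))
```

The Planner sees one short line per endpoint, however detailed the documentation is. The Controller receives the full docs, but only for the endpoint it actually has to call.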

2. Step-by-Step Tutorial

Now that we have a good understanding of hierarchical planning agents and LangChain’s OpenAPI Toolkit, let’s imagine a scenario where we want an AI agent to identify the best flights between Lisbon and Sydney on the 5th of May 2025.

Below is a comprehensive guide to setting up and using LangChain’s OpenAPI Toolkit to query flight information with natural language inputs. I’ll walk through the steps required to:

- configure the script;
- authenticate with the Amadeus API, which we use to obtain flight information;
- interact with the API using an OpenAI GPT model (gpt-4o-mini in this example).

2.1 Prerequisites

- Install the following Python packages:
  - PyYAML (`pip install pyyaml`)
  - Requests (`pip install requests`)
  - LangChain Community (`pip install langchain_community`)
  - LangChain OpenAI (`pip install langchain_openai`)

- Amadeus API credentials:
  - Create an account on Amadeus for Developers.
  - Create a new application to obtain your `client_id` and `client_secret`.
  - Set the credentials as environment variables: `export AMADEUS_CLIENT_ID="your_client_id"` and `export AMADEUS_CLIENT_SECRET="your_client_secret"`.
  - Also set the authentication endpoint as an environment variable: `export AMADEUS_AUTH_URL="the_authentication_url"`. For the test environment, this is probably `https://test.api.amadeus.com/v1/security/oauth2/token`.

- OpenAPI YAML file:
  - Download the latest Amadeus OpenAPI specification in YAML format (version 3.0) or simply use this one.
  - Save the file as `amadeus_openapi.yaml`.

- OpenAI API key:
  - Sign up at OpenAI.
  - Navigate to the API Keys section and generate an API key.
  - Set it as an environment variable: `export OPENAI_API_KEY="your_openai_api_key"`

Now you are ready to start creating your Python script. 🙂
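Before writing the agent, it can help to check that the environment variables from the prerequisites are actually set. The sketch below does this; `missing_env_vars` is a hypothetical helper, not part of any library. `AMADEUS_AUTH_URL` is left out of the required list because the script falls back to a default test URL for it.

```python
import os

# Variables the script cannot run without (AMADEUS_AUTH_URL has a default later on)
REQUIRED_VARS = ["AMADEUS_CLIENT_ID", "AMADEUS_CLIENT_SECRET", "OPENAI_API_KEY"]


def missing_env_vars(names, env=os.environ):
    """Return the names that are unset or empty in `env` (defaults to os.environ)."""
    return [name for name in names if not env.get(name)]


missing = missing_env_vars(REQUIRED_VARS)
if missing:
    print(f"Missing environment variables: {', '.join(missing)}")
```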

2.2 Loading the OpenAPI Specification

The script starts by loading the Amadeus OpenAPI YAML file and reducing its size to retain only essential information.

```python
import yaml

from langchain_community.agent_toolkits.openapi.spec import reduce_openapi_spec


# Load and reduce the OpenAPI spec from a YAML file
def load_openapi_spec(file_path):
    with open(file_path, encoding="utf8") as f:
        # safe_load only builds plain Python objects (dicts, lists, strings, numbers)
        raw_api_spec = yaml.safe_load(f)
    return raw_api_spec, reduce_openapi_spec(raw_api_spec)


swagger_file = "amadeus_openapi.yaml"
raw_api_spec, api_spec = load_openapi_spec(swagger_file)
```

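Explanation:

- The `yaml` module reads the YAML file into a Python dictionary.

- `reduce_openapi_spec` simplifies the spec for easier handling. It keeps the servers and the API description. For each endpoint, it keeps a trimmed version of the documentation: the description, required parameters, request body and successful response.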
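To get a feel for what the reduction discards, here is a simplified sketch of the per-endpoint trimming step. It is modeled on how `reduce_openapi_spec` appears to behave in recent `langchain_community` versions, but it is not the library's code: the real function also resolves `$ref` references and collects the servers and API description. The operation object below is hypothetical.

```python
def reduce_endpoint_docs(docs):
    """Keep only the description, required parameters, request body and 200 response."""
    out = {}
    if docs.get("description"):
        out["description"] = docs["description"]
    if docs.get("parameters"):
        # Optional parameters are dropped to save tokens
        out["parameters"] = [p for p in docs["parameters"] if p.get("required")]
    if "200" in docs.get("responses", {}):
        out["responses"] = docs["responses"]["200"]
    if docs.get("requestBody"):
        out["requestBody"] = docs["requestBody"]
    return out


# A hypothetical operation object, as it might appear under paths -> /flights -> get
raw_docs = {
    "summary": "Search flights",
    "description": "Returns flight offers",
    "parameters": [
        {"name": "origin", "in": "query", "required": True},
        {"name": "max", "in": "query", "required": False},
    ],
    "responses": {"200": {"description": "OK"}, "400": {"description": "Bad request"}},
}

print(reduce_endpoint_docs(raw_docs))
```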

If you decide to explore other APIs, you can find a large number of OpenAPI specs here: APIs-guru/openapi-directory

2.3 Extracting Endpoints

The script extracts available GET and POST endpoints from the OpenAPI spec.

```python
# Extract all GET and POST endpoints from the OpenAPI spec
def get_endpoints(raw_api_spec):
    return [
        (route, operation)
        for route, operations in raw_api_spec["paths"].items()
        for operation in operations
        if operation in ["get", "post"]
    ]


endpoints = get_endpoints(raw_api_spec)
print(f"Endpoints: {len(endpoints)}")
```

Explanation:

- The function iterates over the paths and their HTTP methods, keeping only the GET and POST operations.

- The number of endpoints is printed for verification.
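To check what `get_endpoints` returns without loading the Amadeus file, it can be run on a tiny inline spec. The spec below is made up. It shows that non-method keys of a path item (such as a path-level `parameters` list) and other methods such as `delete` are skipped. A `Counter` then gives a per-method breakdown.

```python
from collections import Counter


def get_endpoints(raw_api_spec):
    """Same logic as above: (route, method) pairs for every GET and POST operation."""
    return [
        (route, operation)
        for route, operations in raw_api_spec["paths"].items()
        for operation in operations
        if operation in ["get", "post"]
    ]


# A tiny hypothetical spec; path items may also hold non-method keys like "parameters"
toy_spec = {
    "paths": {
        "/flights": {"get": {}, "post": {}, "parameters": []},
        "/orders/{id}": {"get": {}, "delete": {}},
    }
}

endpoints = get_endpoints(toy_spec)
print(endpoints)
print(Counter(method for _, method in endpoints))
```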

2.4 Authenticating with the Amadeus API

The script constructs authentication headers by requesting an access token from the Amadeus API.

```python
import requests


# Construct authorization headers by requesting an access token
def construct_auth_headers(auth_url, client_id, client_secret):
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    data = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    }
    # A timeout keeps the script from hanging if the auth server does not respond
    response = requests.post(auth_url, headers=headers, data=data, timeout=30)
    response.raise_for_status()
    # Indexing (instead of .get) fails loudly rather than sending "Bearer None"
    return {"Authorization": f"Bearer {response.json()['access_token']}"}
```

Explanation:

- The `construct_auth_headers` function makes a `POST` request to the Amadeus authentication endpoint.

- It returns the `Authorization` header containing the access token.
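Access tokens from a client-credentials flow expire, so a long-running script will eventually send a stale token. The sketch below caches the token and refreshes it shortly before it expires. `CachedToken` is a hypothetical helper. It assumes the token response includes the standard OAuth 2.0 `expires_in` field (in seconds), and `fetch_token` is any callable returning the parsed JSON response.

```python
import time


class CachedToken:
    """Caches an OAuth 2.0 access token and refreshes it shortly before it expires.

    `fetch_token` is any callable returning the parsed token response as a dict;
    it is assumed to contain "access_token" and "expires_in" (seconds).
    """

    def __init__(self, fetch_token, margin_seconds=60, clock=time.monotonic):
        self._fetch_token = fetch_token
        self._margin = margin_seconds
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def headers(self):
        # Refresh when there is no token yet or it is within `margin_seconds` of expiring
        if self._token is None or self._clock() >= self._expires_at - self._margin:
            payload = self._fetch_token()
            self._token = payload["access_token"]
            self._expires_at = self._clock() + float(payload.get("expires_in", 0))
        return {"Authorization": f"Bearer {self._token}"}
```

`RequestsWrapper` receives a fixed `headers` dict. One way to use this helper is therefore to call `headers()` and rebuild the wrapper (and agent) before each run, instead of reusing a wrapper created long ago.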

2.5 Initializing the Requests Wrapper

The script initializes a RequestsWrapper to manage API requests with authentication.

```python
import os

from langchain_community.utilities.requests import RequestsWrapper


# Initialize the RequestsWrapper with authentication headers
def initialize_requests_wrapper(client_id, client_secret):
    auth_url = os.environ.get(
        "AMADEUS_AUTH_URL", "https://test.api.amadeus.com/v1/security/oauth2/token"
    )
    headers = construct_auth_headers(auth_url, client_id, client_secret)
    return RequestsWrapper(headers=headers)


# Read the Amadeus credentials set as environment variables in the prerequisites
client_id = os.environ["AMADEUS_CLIENT_ID"]
client_secret = os.environ["AMADEUS_CLIENT_SECRET"]

requests_wrapper = initialize_requests_wrapper(client_id, client_secret)
```

Explanation:

`initialize_requests_wrapper` uses the `construct_auth_headers` function to create a `RequestsWrapper` with the necessary headers.

2.6 Creating the OpenAPI Agent

The OpenAPI agent uses the reduced spec and requests wrapper to interpret natural language queries.

```python
import os

from langchain_openai import ChatOpenAI
from langchain_community.agent_toolkits.openapi import planner


# Initialize the OpenAPI agent using the provided spec and requests wrapper.
# Beware of the rate limits associated with the model used: https://platform.openai.com/settings/organization/limits
# gpt-4 performs better, but in Tier 1 it has a tokens-per-minute limit of 10K.
# gpt-4o-mini is used here because its Tier 1 limit is 300K tokens per minute.
def initialize_agent(api_spec, requests_wrapper):
    llm = ChatOpenAI(
        model_name="gpt-4o-mini",
        temperature=0.0,
        api_key=os.environ.get("OPENAI_API_KEY"),
    )
    return planner.create_openapi_agent(
        api_spec,  # The reduced OpenAPI spec that defines the API's structure and available endpoints.
        requests_wrapper,  # The RequestsWrapper instance that handles authenticated requests to the API.
        llm,  # The language model used to interpret queries and generate responses.
        allow_dangerous_requests=True,  # Required so the agent can call the API through its request tools.
        # handle_parsing_errors is an AgentExecutor option, so it is passed via agent_executor_kwargs
        agent_executor_kwargs={"handle_parsing_errors": True},
    )


agent = initialize_agent(api_spec, requests_wrapper)
```

Explanation:

- The `ChatOpenAI` class initializes the chat model, here `gpt-4o-mini` with temperature 0 for more deterministic output.

- The `planner.create_openapi_agent` function creates the hierarchical agent (Planner plus Controller) that interprets queries.

- In this example, we must set `allow_dangerous_requests=True` so the OpenAPI agent can use its request tools automatically. This can be dangerous, because the agent may issue unwanted requests. Make sure your custom OpenAPI spec (YAML) only exposes endpoints that are safe to call before setting this flag to true.
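One way to make the spec "safe" in the sense described above is to strip it down to read-only operations before reducing it. Then the agent cannot even see POST or DELETE endpoints. The sketch below assumes a standard OpenAPI 3.0 `paths` structure. `restrict_methods` is a hypothetical helper, not a LangChain API.

```python
import copy

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def restrict_methods(raw_api_spec, allowed=("get",)):
    """Return a copy of an OpenAPI spec keeping only operations whose method is allowed.

    Non-method keys of a path item (e.g. "parameters", "summary") are preserved,
    and paths left without any operation are dropped.
    """
    spec = copy.deepcopy(raw_api_spec)
    allowed = {m.lower() for m in allowed}
    filtered_paths = {}
    for route, item in spec.get("paths", {}).items():
        kept = {k: v for k, v in item.items() if k not in HTTP_METHODS or k in allowed}
        if any(k in HTTP_METHODS for k in kept):
            filtered_paths[route] = kept
    spec["paths"] = filtered_paths
    return spec
```

It would be applied before reduction, for example `api_spec = reduce_openapi_spec(restrict_methods(raw_api_spec))`. Note that restricting to GET may hide endpoints that a given API only offers via POST, so the allowed methods should be chosen per API.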

2.7 Querying the Amadeus API

The final step is to define a query and invoke the agent.

```python
# Define a query to interact with the agent
query = (
    "Find a flight from Lisbon to Sydney. The departure should happen on the 5th of May 2025. "
    "Order the list of applicable flights by price, starting with the cheapest. "
    "Include all available details about each flight."
)

# Invoke the agent; the result is a dict whose "output" key holds the final answer
response = agent.invoke(query)
print(response["output"])
```

Explanation:

- The query asks the agent to find flights based on specific criteria.

- The response is printed to the console.
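For the query above, the Controller eventually has to issue a request similar to the one built below. Building it by hand makes it easy to compare the agent's answer with the raw API response. The sketch assumes the Amadeus test environment's Flight Offers Search endpoint (`/v2/shopping/flight-offers`) and its `originLocationCode`, `destinationLocationCode`, `departureDate`, `adults` and `max` query parameters. Verify these against your copy of the spec.

```python
from urllib.parse import urlencode

# Assumed base URL and path of the Amadeus test environment's Flight Offers Search API
BASE_URL = "https://test.api.amadeus.com/v2/shopping/flight-offers"


def flight_offers_url(origin, destination, departure_date, adults=1, max_results=None):
    """Build a Flight Offers Search GET URL from IATA codes and an ISO date (YYYY-MM-DD)."""
    params = {
        "originLocationCode": origin,
        "destinationLocationCode": destination,
        "departureDate": departure_date,
        "adults": adults,
    }
    if max_results is not None:
        params["max"] = max_results
    return f"{BASE_URL}?{urlencode(params)}"


print(flight_offers_url("LIS", "SYD", "2025-05-05"))
```

The resulting URL could be passed to `requests_wrapper.get(...)`, which sends it with the same authentication headers the agent uses.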

2.8 Analyzing the Results

This is the outcome of the agent after several iterations:

```text
Thought: I have successfully retrieved the flight offers from Lisbon to Sydney for the specified date. The details of the available flights are as follows:

1. **Flight Offer ID: "1"**
   - **Price:** 546.70 EUR
   - **Duration:** 32H 15M
   - **Departure:** 2025-05-05 from LIS
   - **Arrival:** 2025-05-06 at SYD

2. **Flight Offer ID: "2"**
   - **Price:** 546.70 EUR
   - **Duration:** 32H 15M
   - **Departure:** 2025-05-05 from LIS
   - **Arrival:** 2025-05-06 at SYD

Both flight offers have the same price and duration. If you need more specific details or further actions, please let me know!

Final Answer: The flight offers from Lisbon to Sydney on the 5th of May 2025 are as follows:

1. Flight Offer ID: "1" - Price: 546.70 EUR, Duration: 32H 15M, Departure: 2025-05-05 from LIS, Arrival: 2025-05-06 at SYD.
2. Flight Offer ID: "2" - Price: 546.70 EUR, Duration: 32H 15M, Departure: 2025-05-05 from LIS, Arrival: 2025-05-06 at SYD.

> Finished chain.
```

The flight details provided are not real, as we are using a test instance of the Amadeus API. However, the agent was able to independently identify the necessary endpoints and analyze the retrieved information to achieve the specified goal.
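The final ordering is produced by the LLM, so it is worth checking it against the raw data. The sketch below sorts offers by price in plain Python. It assumes the Flight Offers Search response has a top-level `data` list, and that each offer carries `price.grandTotal` as a string and `itineraries[].duration` in ISO 8601 form. Verify these field names against your spec. `offers_by_price` is a hypothetical helper.

```python
from decimal import Decimal


def offers_by_price(response_json):
    """Sort offers from a Flight Offers Search response by price, cheapest first.

    Assumes each offer looks like {"id": ..., "price": {"grandTotal": "546.70",
    "currency": "EUR"}, "itineraries": [{"duration": "PT32H15M", ...}]}.
    """
    rows = []
    for offer in response_json.get("data", []):
        price = offer["price"]
        rows.append({
            "id": offer["id"],
            # Decimal avoids float rounding surprises when comparing prices given as strings
            "price": Decimal(price["grandTotal"]),
            "currency": price.get("currency"),
            "durations": [it.get("duration") for it in offer.get("itineraries", [])],
        })
    # sorted() is stable, so offers with equal prices keep their original order
    return sorted(rows, key=lambda row: row["price"])
```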

Find the complete code for this example and other AI agents in this repository.

3. Conclusion

This example suggests that these agents hold immense potential in more complex scenarios, especially those involving sophisticated APIs, and could revolutionize the way we interact with applications.

These agents can streamline how we interact with APIs by abstracting complex request-response cycles into conversational queries. In practice, this could mean creating more dynamic customer service bots, automating business processes, or even enabling non-technical users to build workflows by describing their needs in plain language. The potential is enormous!

While the initial implementation shared in this tutorial provides a solid foundation, there are key areas that require further refinement to maximize the Toolkit’s potential:

- Improving the Planner Tool to ensure it selects the appropriate endpoints for complex queries. This can be achieved by incorporating few-shot examples that demonstrate how to handle multi-step interactions effectively.

- Enhancing the Controller to better manage unexpected API responses and handle error cases more gracefully, ensuring the agent remains robust and reliable.

- Incorporating Stateful Tools to maintain context across multiple API calls. This can be accomplished using LangChain’s memory utilities or by storing session data in a dictionary, enabling more coherent and context-aware interactions.
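The last point mentions storing session data in a dictionary. The sketch below illustrates that idea with `SessionState`, a hypothetical class (not a LangChain API). It keeps results keyed by name and renders the most recent ones as text that could be prepended to the next query.

```python
from dataclasses import dataclass, field


@dataclass
class SessionState:
    """Hypothetical per-session store for results of earlier API calls."""

    data: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def remember(self, key, value):
        # Move a re-stored key to the end so history reflects the latest update
        if key in self.history:
            self.history.remove(key)
        self.data[key] = value
        self.history.append(key)

    def recall(self, key, default=None):
        return self.data.get(key, default)

    def as_context(self, last_n=3):
        """Render the most recently stored entries as 'key: value' lines."""
        return "\n".join(f"{k}: {self.data[k]}" for k in self.history[-last_n:])
```

For example, after `state.remember("cheapest_offer_id", "1")`, a follow-up query could be built as `f"{state.as_context()}\n\nShow the baggage allowance for the cheapest offer."`, giving the agent the earlier result without repeating the whole search.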